Edge AI: Empowering Real-Time Decision Making with FPGA

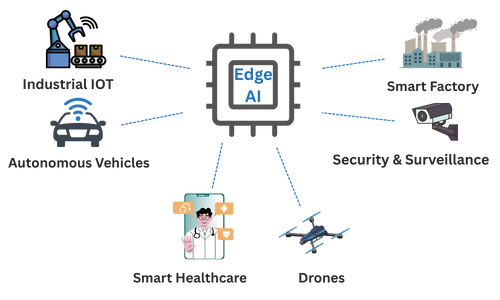

As artificial intelligence (AI) becomes increasingly integrated into our lives, the focus is shifting from centralized cloud computing to real-time, on-device intelligence, a trend known as Edge AI. From autonomous vehicles and drones to smart cameras and wearable medical devices, edge AI systems make faster decisions by processing data locally rather than relying on a distant server. Field-Programmable Gate Arrays (FPGAs) are a key enabler of this capability: they offer the low-latency, energy-efficient, reconfigurable hardware that demanding edge environments require.

Here, we explore how FPGAs empower edge AI applications by comparing them with traditional processing platforms like Central Processing Units (CPUs) and Graphics Processing Units (GPUs). Through this lens, we’ll see why FPGAs are gaining momentum in scenarios where real-time decision-making is critical.

The Shift Toward Edge AI

Traditional AI applications rely heavily on powerful cloud servers for training and inference. While the cloud offers scalability and computational strength, it comes with inherent drawbacks: latency, security risks, and dependency on internet connectivity. For time-sensitive applications—like object detection in autonomous vehicles or anomaly detection in industrial systems—even milliseconds of delay can lead to undesirable outcomes.
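To put "milliseconds of delay" in concrete terms, the short Python sketch below computes how far a vehicle travels while a decision is still pending. The function name and the example speed and delay are illustrative assumptions, not measurements from any real system.

```python
def distance_during_delay_m(speed_kmh: float, delay_ms: float) -> float:
    """Distance in metres covered at constant speed while waiting delay_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * delay_ms / 1000   # ms -> s, then distance = speed * time


# Illustrative inputs only: 108 km/h (30 m/s) and a 100 ms round trip.
print(distance_during_delay_m(108, 100))  # 3.0
```

At that speed, every additional 10 ms of latency corresponds to roughly another 0.3 m travelled before the system can react.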

Edge AI addresses these challenges by moving computation closer to the source of data. Instead of sending information to the cloud, devices analyze it on the spot, enabling instant feedback. However, running AI models locally requires specialized hardware capable of meeting the tight constraints of low latency, low power, and high reliability. This is where FPGAs shine.

What is an FPGA?

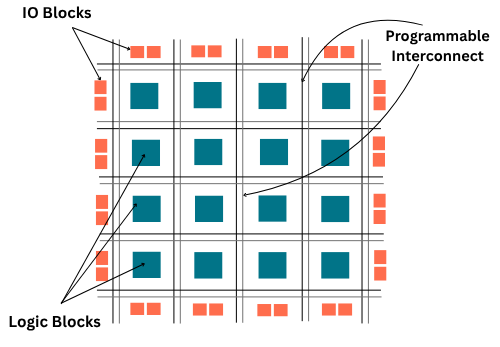

An FPGA is a semiconductor device whose logic can be reconfigured after manufacturing to implement specific functions. Unlike CPUs, which are general-purpose processors optimized for sequential, control-heavy code, and GPUs, which run massively parallel workloads on a fixed architecture, FPGAs let developers build custom hardware accelerators tailored to a specific workload.

This flexibility lets developers design dedicated hardware logic for AI inference, so deep learning models can run efficiently with predictable, deterministic latency and high energy efficiency.
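Much of an FPGA's programmable fabric is built from small lookup tables (LUTs), commonly with 4 to 6 inputs, each of which can be loaded with any truth table. The Python sketch below is a simplified model assuming a 4-input LUT; the class name and bit ordering are illustrative choices, and real devices also contain flip-flops, routing, DSP blocks, and on-chip memory that this toy omits.

```python
class LUT4:
    """Toy (hypothetical) model of a 4-input FPGA lookup table.

    The 16-bit `config` word is the truth table: bit i holds the output
    for the input combination whose binary value is i.
    """

    def __init__(self, config: int = 0):
        self.program(config)

    def program(self, config: int) -> None:
        # "Reprogramming" only loads a new truth table; the silicon is unchanged.
        if not 0 <= config < 1 << 16:
            raise ValueError("config must fit in 16 bits")
        self.config = config

    def __call__(self, a: int, b: int, c: int, d: int) -> int:
        index = a | (b << 1) | (c << 2) | (d << 3)  # inputs select one table entry
        return (self.config >> index) & 1


lut = LUT4(0x8000)       # only entry 15 (all inputs 1) is set: a 4-input AND
print(lut(1, 1, 1, 1))   # 1
print(lut(1, 0, 1, 1))   # 0

lut.program(0xFFFE)      # every entry except 0 is set: a 4-input OR
print(lut(0, 0, 1, 0))   # 1
print(lut(0, 0, 0, 0))   # 0
```

The same physical element changes function simply by loading a different configuration, which is, in miniature, what "reconfigurable hardware" means.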

Comparing CPUs, GPUs, and FPGAs in AI Applications

Understanding the strengths and weaknesses of each hardware type is essential when choosing the right platform for AI workloads. Two tables in this article summarize their characteristics: Table 1 covers general hardware traits, and Table 2 covers AI-specific performance.

Table 1: Hardware Characteristics

| Feature | CPU | GPU | FPGA |
|---|---|---|---|
| General Purpose | Yes | No (optimized for parallel workloads) | No (reconfigured for specific tasks) |
| Programmability | Very High | High | Medium (HDL or HLS required) |
| Parallelism | Limited (relatively few cores) | Massive (hundreds to thousands of cores) | Customizable (can be deeply parallelized) |
| Latency | Moderate | Moderate to High | Very Low (custom pipelines possible) |
| Throughput | Low to Medium | High | High (depends on design) |
| Power Efficiency | Low | Medium | High |
| Flexibility | High | Medium | Medium to Low (logic must be re-synthesized to change) |

Why FPGAs Excel in Edge AI

1. Deterministic Real-Time Performance

FPGAs enable the creation of custom data paths and deeply pipelined architectures, ensuring predictable and real-time performance. This deterministic nature is crucial in safety-critical systems like collision avoidance in drones or process control in industrial automation, where any unpredictability can lead to failure.
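A toy cycle-level model in Python shows why a pipelined datapath has deterministic latency: every sample spends exactly one clock cycle in each stage, so its latency is always equal to the pipeline depth, and once the pipeline fills, one result emerges per cycle. The function name and stage functions are hypothetical; a real design would be written in an HDL or with HLS tools.

```python
def simulate_pipeline(samples, stages):
    """Cycle-level toy model of a pipelined datapath (hypothetical helper).

    `stages` is a list of functions, each standing in for the logic between
    two pipeline registers. One new sample enters per clock cycle.
    Returns (sample_index, result, latency_in_cycles) for each sample.
    """
    depth = len(stages)
    regs = [None] * depth  # regs[i]: (index, entry_cycle, value) after stage i
    pending = list(enumerate(samples))[::-1]  # reversed so pop() keeps input order
    results = []
    cycle = 0
    while pending or any(r is not None for r in regs):
        cycle += 1
        # All registers update on the same clock edge, so shift from the last
        # stage backwards: each stage reads what its predecessor held last cycle.
        for i in range(depth - 1, 0, -1):
            prev = regs[i - 1]
            regs[i] = None if prev is None else (prev[0], prev[1], stages[i](prev[2]))
        if pending:
            index, x = pending.pop()
            regs[0] = (index, cycle, stages[0](x))
        else:
            regs[0] = None
        out = regs[-1]
        if out is not None:
            index, entry_cycle, value = out
            results.append((index, value, cycle - entry_cycle + 1))
    return results


# Hypothetical 3-stage datapath: double, add one, square.
stages = [lambda x: x * 2, lambda x: x + 1, lambda x: x * x]
for index, value, latency in simulate_pipeline([1, 2, 3], stages):
    print(index, value, latency)
# 0 9 3
# 1 25 3
# 2 49 3
```

Every sample takes exactly three cycles regardless of the others; at an assumed 200 MHz clock, that fixed latency would be 15 ns.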

2. Low Latency and High Throughput

Unlike CPUs and GPUs, where data must pass through multiple software and hardware abstraction layers, FPGAs execute logic directly in hardware. This removes much of that overhead, enabling very low inference latency that, in some designs, is measured in microseconds. FPGAs are especially effective in streaming applications, where continuous data flows (such as video or sensor inputs) must be processed on the fly.
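Streaming processing of this kind can be sketched in Python as a finite impulse response (FIR) filter that consumes one sample and emits one output at a time. The function name and tap values are illustrative assumptions, and the FIR filter is only a stand-in for the kind of streaming operation an FPGA pipeline performs, not a full neural network.

```python
from collections import deque


def streaming_fir(samples, taps):
    """Filter a stream one sample at a time, emitting one output per input.

    The deque plays the role of a hardware shift register: each new sample
    shifts in at the front and the oldest falls off the end. No frame of
    data is buffered before processing starts, so `samples` may be endless.
    """
    window = deque([0] * len(taps), maxlen=len(taps))
    for x in samples:
        window.appendleft(x)  # newest sample at position 0
        # In an FPGA, these multiplies would typically run in parallel each cycle.
        yield sum(t * w for t, w in zip(taps, window))


# Hypothetical 3-tap moving sum over a short sensor stream.
print(list(streaming_fir([1, 2, 3, 4], [1, 1, 1])))  # [1, 3, 6, 9]
```

Because it is a generator, each result is available as soon as its input arrives, mirroring how a hardware pipeline produces output while data is still flowing in.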

3. Energy Efficiency

Power consumption is a major constraint at the edge. GPUs, while powerful, are generally not optimized for low-power environments. FPGAs can often complete the same task using significantly less energy, because their logic is specialized to the workload and runs in parallel, which makes them well suited to battery-operated or thermally constrained devices.
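Battery life depends on energy per inference rather than on power draw alone: energy is power multiplied by time, so a chip that draws less power but runs much slower does not automatically win. The sketch below uses hypothetical power and latency figures chosen only to illustrate the arithmetic; they are not measurements of any CPU, GPU, or FPGA, and the function names are illustrative.

```python
def energy_per_inference_mj(power_w: float, latency_ms: float) -> float:
    """Energy for one inference in millijoules: E = P * t (W * ms = mJ)."""
    return power_w * latency_ms


def inferences_per_charge(battery_wh: float, energy_mj: float) -> int:
    """How many inferences a battery could power, ignoring all other loads."""
    battery_mj = battery_wh * 3600 * 1000  # 1 Wh = 3600 J = 3.6e6 mJ
    return int(battery_mj // energy_mj)


# Hypothetical figures for illustration only, not benchmark results.
fast_but_hungry = energy_per_inference_mj(power_w=10.0, latency_ms=5.0)  # 50.0 mJ
slow_but_frugal = energy_per_inference_mj(power_w=2.0, latency_ms=10.0)  # 20.0 mJ
print(inferences_per_charge(10.0, fast_but_hungry))  # 720000
print(inferences_per_charge(10.0, slow_but_frugal))  # 1800000
```

Comparing platforms this way, with measured power and latency for the same model, gives a fairer picture than comparing peak power ratings.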

4. Hardware Customization

FPGAs can be tailored to specific neural network architectures. For example, developers can implement only the layers and operations a model requires and omit all unnecessary logic. This customization improves both speed and efficiency, something a fixed-architecture GPU cannot offer.
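One common form of this customization, assumed here rather than stated above, is reduced-precision arithmetic: many FPGA inference designs replace 32-bit floating point with 8-bit integers, which typically need far less logic per multiplier. The Python sketch below shows symmetric int8 quantization of a dot product, the core operation of a neural network layer; the function names and scale values are hypothetical.

```python
def quantize_int8(values, scale):
    """Map real values to signed 8-bit integers: q = round(v / scale), clamped.

    Note: Python's round() uses round-half-to-even; hardware may round differently.
    """
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(q_weights, q_acts, w_scale, a_scale):
    """Integer multiply-accumulate, rescaled to a real value at the end."""
    acc = 0  # hardware would use a wider accumulator (e.g. 32 bits) to avoid overflow
    for w, a in zip(q_weights, q_acts):
        acc += w * a  # 8-bit x 8-bit integer multiply
    return acc * w_scale * a_scale


# Illustrative values and scales only.
weights = [0.5, -0.25, 1.0]
acts = [1.0, 2.0, 0.5]
qw = quantize_int8(weights, 0.25)  # [2, -1, 4]
qa = quantize_int8(acts, 0.25)     # [4, 8, 2]
print(int8_dot(qw, qa, 0.25, 0.25))  # 0.5, matching the float result 0.5 - 0.5 + 0.5
```

On an FPGA, the bit width can be chosen per layer, which is one way the hardware is "tailored" to a model in a way a fixed GPU datapath is not.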

Table 2: AI-Specific Performance

| Criteria | CPU | GPU | FPGA |
|---|---|---|---|
| Training | Feasible for small models; slow for large deep learning models | Best for large model training (deep learning, CNNs, transformers) | Rarely used for training; possible but inefficient |
| Inference | Adequate for light, low-volume workloads | Good for high-throughput inference | Excellent for ultra-low-latency edge inference |
| Batch Processing | Less efficient | Highly efficient | Can be customized for optimal batch sizes |
| Streaming/Real-Time AI | Slower response times | Good, but can be limited by memory access patterns | Excellent (continuous data processed on the fly in custom pipelines) |
| Edge Deployment | Power-hungry for heavy AI workloads | Better than CPU, but still not ideal | Ideal for low-power, embedded applications |

Challenges of Using FPGAs

Despite their advantages, FPGAs come with trade-offs. Programming them is more complex and often requires knowledge of Hardware Description Languages (HDLs) such as VHDL or Verilog, or of high-level synthesis (HLS) tools such as AMD (formerly Xilinx) Vitis HLS. Development cycles are longer, and debugging hardware logic is less intuitive than debugging software.

Real-World Applications of FPGA-Based Edge AI

- Smart Surveillance: FPGAs power edge cameras that detect threats or suspicious behaviour in real time without streaming data to the cloud.

- Autonomous Drones: With limited payload and battery life, drones benefit from FPGAs’ lightweight, low-power, high-speed AI inference.

- Medical Devices: In wearables or portable scanners, FPGAs deliver diagnostic insights instantly while preserving patient data privacy.

- Industrial Automation: FPGAs enable real-time control in robotics, predictive maintenance, and quality assurance systems.

Conclusion

As AI continues its migration from the cloud to the edge, hardware selection becomes increasingly important. While CPUs and GPUs have their place in AI pipelines—especially in training and cloud inference—FPGAs offer a uniquely powerful solution for edge AI. Their reconfigurable logic, ultra-low latency, and energy efficiency make them ideal for real-time decision-making in resource-constrained environments.

Though not without their challenges, modern tools and ecosystem support are making FPGA development more accessible. For organizations and developers building the next generation of responsive, intelligent edge devices, FPGAs represent a compelling platform that combines performance, precision, and practicality.

Explore how we bring intelligence to the edge—visit our Services page or book a free consultation to see how embedded AI can elevate your next product.

– The Senologic Team